- AI Platforms

Fueled by a Visual Designer, Marketplace for Reusable Connectors, ETL & ML Blocks.

Agentic AI Integrations

Integration enables agents to deliver AI outcomes faster, better, simpler.

DataNimbus FinHub

With the DataNimbus FinHub platform, we offer a whole range of escrow management.

Navigate Your AI Journey

Break free from legacy! Modernize your core with intelligent, AI-powered solutions

- AI Applications

- Services

DataNimbus Consulting

- Data AI Strategy

- Data and AI Architecture

- Payment Automation

Product Engineering Services

- Product Design & UX

- Custom APIs

- AI enabled Applications

Data Analytics & AI/ML

- Data Management

- AI Applications and Models

- Custom Workflows

Enterprise Integration

- Custom API

- Process Orchestration

- Intelligent Process Orchestration Management

- Customers

- Resources

- Company

Blog

Standardize Data Pipeline Development with Flexibility: How DataNimbus Designer Makes It Easy

Introduction:

In today’s fast-moving digital landscape, organizations rely on data pipelines to power dashboards, fuel machine learning models, and unlock actionable insights. However, pipeline development often presents challenges related to speed, reusability, and governance, particularly as teams expand.

Databricks offers a powerful foundation for scalable data processing and advanced analytics. What teams need next is a way to standardize pipeline development while enabling customization and faster delivery, without increasing complexity.

October 2025 | Blog

Why Traditional Data Pipelines Struggle with Scale and Agility

Traditional data engineering pipelines often hit a wall when organizations need to scale. These pipelines are typically code-heavy, requiring specialized programming skills that create bottlenecks in team workflows. When team members change, these custom-built pipelines become maintenance nightmares, with tribal knowledge walking out the door. Additionally, most traditional pipelines lack reusability, forcing developers to repeatedly rewrite similar logic across different projects. Perhaps most critically, they offer limited governance capabilities, providing minimal visibility into who modified what, when changes occurred, and why they were implemented.

The Need for Reusability and Customization

Modern data engineering requires more than just moving data from point A to point B. Today’s data teams need solutions that allow them to:

- Standardize common processes

- Maintain flexibility for unique business requirements

- Enable collaboration between technical engineers and business analysts

- Ensure proper governance and troubleshooting capabilities

The balance between standardization and customization is precisely where reusable blocks and custom code support become essential. DataNimbus Designer elevates both capabilities to first-class citizens in the pipeline development process, allowing teams to build consistent, maintainable, and flexible data pipelines.

DataNimbus Designer: An Overview

DataNimbus Designer is a comprehensive visual orchestration tool purpose-built to run on top of Databricks. It provides users with an intuitive interface to build and orchestrate data workflows without sacrificing the power or flexibility that data engineers demand.

The platform’s core strength lies in its feature-rich environment tailored specifically for data pipeline development. DataNimbus Designer includes an intuitive drag-and-drop workflow builder that eliminates the need for deep coding expertise, making pipeline development accessible to a broader range of team members.

The tool comes pre-loaded with robust Spark operation blocks designed to handle common data transformations with minimal configuration. For data connectivity, DataNimbus offers out-of-the-box connectors for popular data sources, including Snowflake, Amazon S3, and SQL Server, streamlining the integration process with your existing data ecosystem. When custom logic is required, the platform enables users to seamlessly write and incorporate their own Python, SQL, or Scala code blocks directly into workflows. All of this is underpinned by native Databricks integration, ensuring seamless job orchestration and comprehensive monitoring capabilities.

The Power of Reusable Blocks in Pipeline Development

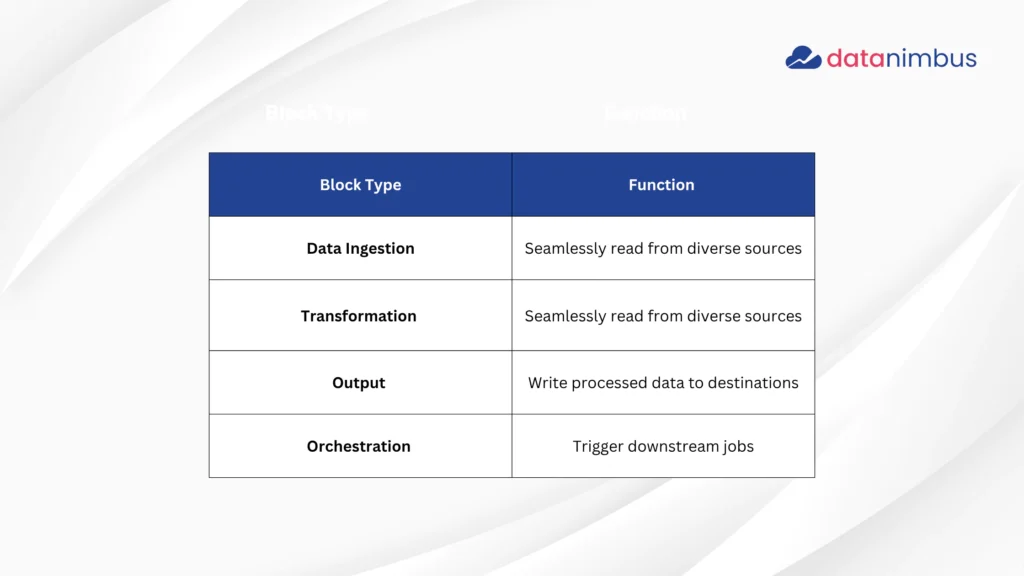

Reusable blocks span the entire data pipeline lifecycle.

Benefits of Standardization Through Blocks

Once configured and tested, a block can be reused across pipelines, delivering significant advantages:

- Reduced development time – Build pipelines in days instead of weeks

- Eliminated repetitive work – Reuse components across different pipelines

- Minimized errors – Leverage already tested components

- Simplified maintenance – Update once with version control
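The reuse idea above can be sketched in plain Python. This is only an illustration of the pattern, not DataNimbus Designer's actual block interface, which this post does not describe: the `Block` class, the `drop_missing` factory, the `run_pipeline` helper, and the sample records are all hypothetical names chosen for the example.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable, Iterable

Row = dict  # a record is modeled as a plain dict in this sketch


@dataclass(frozen=True)
class Block:
    """Hypothetical reusable block: a named, versioned transformation."""

    name: str
    version: str
    transform: Callable[[list[Row]], list[Row]]

    def run(self, rows: list[Row]) -> list[Row]:
        return self.transform(rows)


def drop_missing(column: str) -> Block:
    """Build a block that removes rows where `column` is absent or None."""
    return Block(
        name=f"drop_missing[{column}]",
        version="1.0.0",
        transform=lambda rows: [r for r in rows if r.get(column) is not None],
    )


def run_pipeline(blocks: Iterable[Block], rows: list[Row]) -> list[Row]:
    """Apply each block in order, feeding one block's output to the next."""
    for block in blocks:
        rows = block.run(rows)
    return rows


# One configured block definition, reused by two different pipelines.
require_customer_id = drop_missing("customer_id")

orders = [{"customer_id": 1, "total": 20.0}, {"customer_id": None, "total": 5.0}]
tickets = [{"customer_id": 7, "subject": "refund"}, {"subject": "spam"}]

clean_orders = run_pipeline([require_customer_id], orders)
clean_tickets = run_pipeline([require_customer_id], tickets)
```

Because both pipelines reference the same `require_customer_id` object, a fix to the block's logic (with a bumped `version`) reaches every pipeline that uses it, which is the "update once" benefit listed above.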

Bring Your Own Code (BYOC): Customize Without Constraints

Even with a strong library of reusable components, unique business logic or advanced use cases often require custom code. That’s why Designer includes Bring Your Own Code (BYOC)—a feature that allows users to insert custom logic into any pipeline.

What Users Can Bring

DataNimbus Designer supports multiple ways to customize pipelines:

- Python scripts for data processing

- SQL queries for specific transformations

- Scala functions for optimized Spark operations

- API calls to connect with external systems
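As a rough illustration of the first two options in the list above, the sketch below pairs a custom Python step with a custom SQL query. It makes several assumptions: the function names, the `sales` table, and the `country` and `amount` fields are invented for the example, and Python's built-in `sqlite3` module stands in for the SQL engine purely so the snippet runs anywhere. How Designer actually registers and executes custom code blocks is not covered here.

```python
import sqlite3


def normalize_country(rows):
    """Custom Python step: trim and upper-case a hypothetical `country` field."""
    return [{**r, "country": r["country"].strip().upper()} for r in rows]


# Custom SQL step, expressed as a plain query string.
REVENUE_BY_COUNTRY = """
    SELECT country, SUM(amount) AS revenue
    FROM sales
    GROUP BY country
    ORDER BY country
"""


def run_sql_step(rows, query):
    """Load rows into an in-memory SQLite table and run the query against it.

    SQLite is an assumption made only to keep the sketch self-contained.
    """
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE sales (country TEXT, amount REAL)")
        conn.executemany(
            "INSERT INTO sales (country, amount) VALUES (:country, :amount)",
            rows,
        )
        return conn.execute(query).fetchall()
    finally:
        conn.close()


raw = [
    {"country": " us ", "amount": 10.0},
    {"country": "US", "amount": 5.0},
    {"country": "de", "amount": 7.5},
]

# Chain the Python step into the SQL step, as two blocks in one workflow might be.
result = run_sql_step(normalize_country(raw), REVENUE_BY_COUNTRY)
print(result)  # [('DE', 7.5), ('US', 15.0)]
```

Keeping each piece of custom logic as a small function or query with clear inputs and outputs makes it easier to test in isolation before it is dropped into a larger workflow.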

The Benefits of Flexibility Through BYOC

This blend of visual components and code provides maximum control without disrupting workflow:

- Build exactly what you need – Full flexibility for custom requirements

- Reuse existing logic – Incorporate business logic that already exists

- Experiment quickly – Test new transformations safely and efficiently

- Maintain developer freedom – No constraints on innovation

Standardization Meets Flexibility: The Best of Both Worlds

Most data tools force a trade-off between ease of use and developer freedom. DataNimbus Designer is built to offer both.

This balanced approach enables collaboration across skill levels and roles. Business users build workflows visually from approved components, while developers keep full control over the underlying logic and execution through custom code blocks. Data governance teams gain the visibility and consistency of standardized approaches without blocking the innovation that custom development allows.

The result is a data engineering practice that pairs the speed, consistency, and governance of standardized approaches with the flexibility, power, and expressiveness of custom development, all within a unified platform built specifically for the Databricks ecosystem.

Build Smarter, Not Harder With DataNimbus Designer

Data pipeline development doesn’t have to be complex or time-consuming. With DataNimbus Designer, you get a simple interface, reusable components, custom code support, and seamless Databricks integration. Whether building ETL pipelines or managing machine learning workflows, Designer helps you move faster and stay in control.

Ready to build smarter pipelines on Databricks? Try DataNimbus Designer and see the difference.